The meteoric rise of chatbots, and the lurking risks

Since OpenAI’s ChatGPT rocketed into the public consciousness, lawmakers and tech companies in particular have raised many questions about the technology. In this 360info special, we delve into the influence chatbots and AI are already having on our lives, the “hallucinations” they produce, and how there might be too much of a good thing.

When governments use AI to predict what the people want

Using artificial intelligence to predict the behaviour of communities can veer close to surveillance. (Dorieo, Wikimedia; CC 4.0)

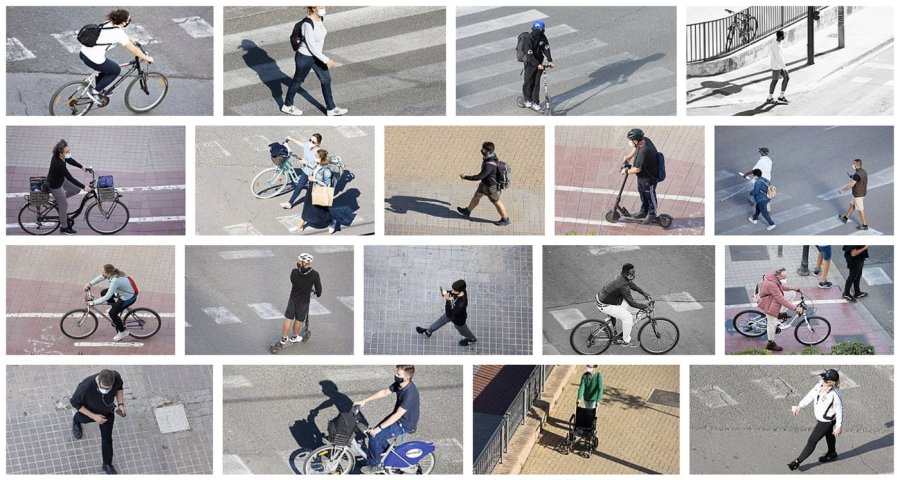

MADRID – The recent exponential growth in the use of AI has given rise to a new field, behavioural data science, which draws on techniques from a range of disciplines and uses AI to understand and predict human behaviour.

While this predictive power can be used to design and implement better policy, privacy concerns are growing. As more data is collected from citizens, predictions may soon become as effective as direct observation, raising concerns about state surveillance.

Governments with this kind of intelligence risk breaching citizens’ privacy and impeding free decision-making in society.

Chatbot revolution risks becoming a race of the reckless

OpenAI, the developer of ChatGPT, has released its latest model, which appears to be its most ambitious public release yet. (Jonathan Kemper, Unsplash)

SYDNEY – For the last few years, tech companies have developed ethical frameworks for the responsible deployment of AI, hired teams of scientists to oversee the application of these frameworks, and pushed back against calls to regulate their activities.

But commercial pressure appears to be changing all that. A chasm is fast opening between what technology companies disclose and what their products can do, and only government action can close it.

If these organisations are going to be less transparent and act more recklessly, then it falls upon the government to act. Expect regulation.

Can we trust machines doing the news?

As we come to rely more on AI-generated information in everyday settings, the question of trust becomes more important. (Robert Scoble via Flickr; CC BY 2.0)

BOSTON – As we come to rely more on AI-generated information in everyday settings, the question of trust becomes more important.

Research examined how disclosing the use of AI in news generation affected perceptions of news accuracy. The results strongly supported the “AI aversion” account: disclosing the use of AI led people to believe news items substantially less, a negative effect explained by lower trust in AI reporters.

Media outlets face not only the challenge of grabbing readers’ attention in a highly competitive digital marketplace, but also that of earning their trust.

Elon’s Twitter ripe for a misinformation avalanche

Misinformation on Twitter is rising under the watch of new CEO Elon Musk. (U.S. Air Force photo by Van Ha, Wikimedia Commons; public domain)

BRISBANE – As online trickery and misinformation surge, the armour that platforms built against them is being stripped away.

Since taking over Twitter, Elon Musk has trashed the platform’s online safety division, and as a result misinformation is back on the rise. Musk, like others, looks to technological fixes to solve his problems. He has already signalled plans to increase Twitter’s use of AI for content moderation.

But this approach is neither sustainable nor scalable, and it is unlikely to be the silver bullet.