January 2, 2025

SINGAPORE – Local film-maker Chai Yee Wei is only just dipping his toes into the waters of artificial intelligence (AI). But he is excited by its potential to give creators the ability to do things that were once deemed impossible.

“How do you get a dog to whine and bark the way you want? You probably can’t,” says the 48-year-old director.

Instead of trying to coax a dog actor into making the right sounds, he might turn to AI software that transforms a human actor’s voice into any dog vocalisation he can think of, says the man behind the mainly Hokkien-language drama Wonderland (2023).

And it is not just uncannily accurate animal sounds that AI can generate.

The science-fiction prequel Alien: Romulus (2024) features an appearance by the android character Rook, who looks and speaks like the late British actor Ian Holm. The actor, who died in 2020, played a similar android in the original 1979 film Alien.

The Holm lookalike that audiences see in Alien: Romulus was created with animatronics, computer graphics and an AI model that can transform an actor’s voice into any other person’s.

With AI voices, Chai says he will never have to worry if an actor is unable to attend voice-dubbing sessions, a common practice in film-making. As long as he has the actor’s permission, he will be free to change dialogue after shooting or salvage dialogue tracks ruined by background noise.

The potential does not stop at sound. Visual effects shots can be created by software that analyses and manipulates elements in an image, such as separating characters from the background, opening up endless possibilities for landscapes and settings.

“This is potentially as disruptive as the birth of the World Wide Web. What used to take a whole visual effects team weeks can now be done by one person in a day,” Chai says.

These practical applications illustrate how media producers, writers and other creative professionals in Singapore are finding real-world uses for AI.

Local author Pugalenthi Sr, 62, who wrote AI-enabled interactive horror stories for the National Library Board, says that AI-assisted storytelling gives writers the ability to reach new audiences. He has penned local and regional folk tale, mystery and suspense works, including Myths And Legends Of Singapore (1991) and Tales Of Fear (1993).

“The younger generation who are digitally savvy do not have leisure reading as one of their top priorities. As a storyteller, I have to reinvent my ways of sharing stories and use all tools available to tell my tale. AI, as I see it, is an additional tool to make my storytelling better,” he says.

Reality check

For Singapore animation studio Robot Playground Media, however, the promise of AI requires careful verification against practical realities.

Studio head Ervin Han, 49, says the litmus test of AI’s usefulness in animation is whether its output meets quality standards without too much human intervention.

“If we have to spend more man-hours fixing what AI generates than doing it ourselves in the first place, then AI has failed,” says the company’s co-founder and chief executive.

The studio produced Downstairs (2019 to 2023), an award-winning comedy animation series for adults broadcast on Mediacorp, and is also producing 2D feature film The Violinist. The Singapore-Spain co-production is co-directed by Han and Spanish animation veteran Raul Garcia, and set in World War II-era Malaya. It is slated for a 2026 release.

Robot Playground Media tests AI tools extensively but uses them primarily for pre-production work, finding them not yet ready for theatre-quality animation. Some AI tools were used in the development and pre-production workflows of The Violinist.

Pugalenthi Sr points to AI’s creative limitations.

“AI can only extrapolate from what was created by humans, but it cannot create something new. It can refer to the van Gogh style of painting and add a Picasso-esque touch, but it cannot create an art piece that is on a par with that of van Gogh or Picasso,” he says.

Legal landscape

As with all innovations, the benefits created by AI come with pitfalls.

At a panel discussion on generative AI in content creation at the Singapore Media Festival in early December 2024, Professor Simon Chesterman, senior director of AI governance at industry organisation AI Singapore, says AI may force creative professionals to rethink how they protect copyright and earn a living.

Online store Amazon had to impose limits on the number of books an author could list for sale because AI-assisted writers were producing hundreds of books a day, he says, giving an example of AI’s capability to flood the market.

He draws a comparison between the potential impact of AI in creative industries and the way streaming services such as Spotify or Apple Music altered the way music reached consumers, so that artistes now depend on touring to make money.

If AI takes over entry-level tasks in media production, as some have predicted will happen, the industry will be bereft of new talent.

“If you take away those jobs, where are the senior people going to come from, if no one cuts their teeth on hours and hours doing editing, coming up with the perfect shot?” asks Prof Chesterman, who is also the David Marshall Professor of Law and vice-provost (educational innovation) at the National University of Singapore.

Institutional support

Between the creative possibilities and legal concerns, Singapore’s institutions are working to find a balanced approach to AI adoption.

The Infocomm Media Development Authority of Singapore (IMDA), for example, provides funding to encourage the industry to experiment with and take up the technology.

“We also train and upskill our talent to ensure they are ready to embrace these opportunities. IMDA recognises that Gen AI offers exciting opportunities but is evolving rapidly. As such, we will work closely with the industry to ensure responsible use of generative AI, and to foster a safe environment for exploring this technology,” says an IMDA spokesperson in response to queries from The Straits Times.

Meanwhile, content creators are already looking towards the next phase.

Jack Neo, Singapore’s most commercially successful film-maker, will release his new film I Want To Be Boss on Jan 24 ahead of Chinese New Year. The story is set in a Singapore where AI is commonplace. Neo, Henry Thia, Aileen Tan and Patricia Mok star in the story about a restaurant owner who buys an AI robot servant, and his predicament after an apprentice becomes a business rival.

And fittingly, Neo says he is experimenting with AI to create certain shots for the film and compose its songs, with lyrics written by him.

The 64-year-old veteran predicts that AI will soon be able to help film-makers achieve more with less, especially in creating big visual effects scenes.

“At this stage, AI scenes still look like animation, but I can see it improving over time,” he says.

Even Han, who expressed scepticism over AI’s ability to create animation that meets his standards, believes that it would be wise to keep tabs on the technology.

“It is something we have to reckon with and prepare for. There’s no point being fearful of it. For now, I still feel my creative team is indispensable. But we can prepare them for a slightly different kind of role in the future.”

How AI is changing content creation in Singapore

Sound production

Mocha Chai Laboratories is using AI in sound design, transforming the human voice into realistic animal sounds, particularly for hard-to-direct creatures such as dogs.

The post-production facility also uses AI to generate fabric movement sounds and clean up noisy dialogue recordings. When actors are unavailable for re-recording, AI helps recreate their voices for new lines of dialogue.

“What used to be impossible is now just a matter of speaking into a microphone,” says founder and film-maker Chai Yee Wei.

Pre-production

Chai’s team uses AI tools during client pitches, creating instant visualisations that can be modified in real time during meetings. The technology also separates characters from backgrounds automatically for quick edits, turning what was weeks of visual effects work for a team into a day’s job for one person.

Public engagement

In October 2024, the National Library Board offered an artificial intelligence-driven horror narrative adventure at Haw Par Villa with the help of StoryGen (Horror Edition). StoryGen creates interactive storytelling experiences in which visitors can customise stories. PHOTO: NATIONAL LIBRARY BOARD/THE STRAITS TIMES

The National Library Board (NLB) has collaborated with Amazon Web Services to develop two main AI prototypes. StoryGen creates interactive storytelling experiences in which visitors can customise stories in real time, while ChatBook understands conversational nuances and generates tailored responses useful to researchers and lay readers.

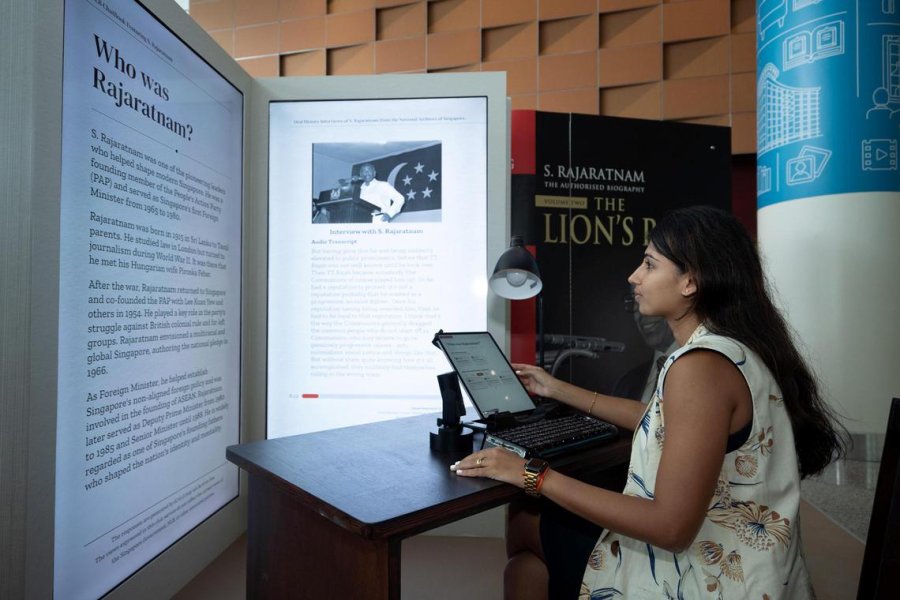

When processing writer Irene Ng’s two-volume authorised biography about one of Singapore’s founding fathers, S. Rajaratnam, ChatBook transformed more than 1,300 pages of material into interactive conversations that felt natural and engaging. The AI was also trained on other resources from NLB and the National Archives of Singapore.

StoryGen lets users customise classic tales using AI. For example, visitors can watch scenes from The Boy And The Garfish, a story from historical text Malay Annals (Sejarah Melayu), come alive through AI-generated visuals or reimagine the story in different genres, such as horror or science fiction.

Commercial production

Singapore start-up Dear.AI is creating AI-generated commercials that eliminate the need for physical shoots. The company generates entire scenes, including locations and talent, through AI tools. It is working on the Jack Neo-directed film I Want To Be Boss and training industry professionals to integrate AI into existing workflows, helping videographers and editors adapt to new technologies.

Mainstream film

I Want To Be Boss marks one of Singapore’s first attempts to incorporate AI into commercial cinema. The production team is exploring AI for several elements – generating original songs in which AI creates melodies to match human-written lyrics, testing high-resolution visual sequences and exploring graphic elements.

AI by the numbers: Tracking the transformation

Production speed

Anime series Unleashing Chaos In The Arcane Realm by media company COL Group was created with the help of AI tools and will be available on Mediacorp’s mewatch in January. PHOTOS: COL WEB/THE STRAITS TIMES

“We generated 24 anime titles within two months. In comparison, traditionally produced anime titles like One Piece or Naruto may take two months or more an episode.” – Bryan Tan, chief executive of COL Web, a Singapore-based media company and branch of China-headquartered COL Group. The group’s anime series created with AI assistance, including The Celestial Lord’s Second Life In The City and Unleashing Chaos In The Arcane Realm, will air on Mediacorp’s digital platform mewatch in January.

“The Coca-Cola commercial was done by about 17 artists from different parts of the world in three weeks. If that was done traditionally, to reach that level of quality, I would imagine it would be at least three times the amount of staffing and probably up to six months of work.” – Ervin Han, chief executive of animation and visual effects studio Robot Playground Media, on a recent AI-created Coca-Cola commercial that sparked online outrage.

“What used to take a visual effects team weeks can now be done by one person in a day.” – Chai Yee Wei, film-maker and founder of Mocha Chai Laboratories, on using AI tools that separate characters from backgrounds, allowing for image manipulation.

Books that chat

“When I tested ChatBook with questions, it was like having a conversation with a knowledgeable friend who has read both my books. It was amazing to encounter an AI-powered companion capable of understanding and responding to my queries within seconds.” – Irene Ng, 61, biographer, former The Straits Times senior political correspondent and Member of Parliament. From July to November 2024, visitors to the National Library Building could converse with the ChatBook prototype, with responses based on resources such as both volumes of Ms Ng’s authorised biography of S. Rajaratnam, totalling over 1,300 pages. The prototype is now available at Punggol Regional Library until late March.

Career evolution

“With AI, we are creating more content using the same resources. The same 50-person team that in the same time frame used to create 10 anime titles without AI can now produce 50 titles with AI. We’re looking at training a whole ecosystem of artists, helping them understand and leverage AI to do their work more efficiently, to achieve a higher quality output.” – Bryan Tan, chief executive of COL Web