January 20, 2025

SEOUL – The AI revolution that’s been shaking up Hollywood for the past few years has made its way to South Korean shores. From remastering classics to creating productions entirely through generative AI, the local film industry is starting to try out AI tools in experimental projects.

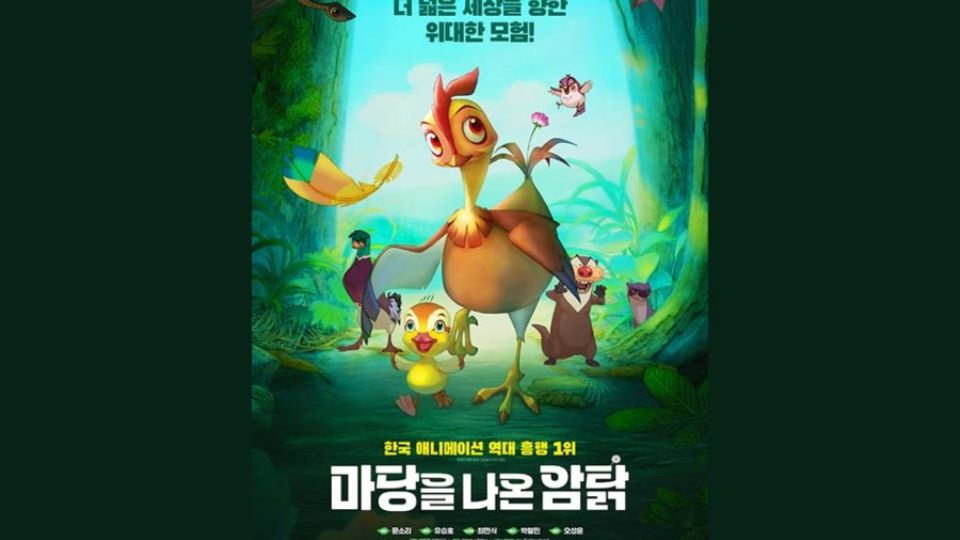

This week brings a remastered version of “Leafie, a Hen into the Wild.” The 2011 animated feature gets new life through an AI remaster from Inshorts, a local tech startup launched just last year, which says its “AI Super-scaler” technology transforms the visuals into crisp 4K quality.

For those familiar with Korean cinema, “Leafie” is nothing short of a landmark work in Korean animation. Adapted from Hwang Sun-mi’s bestselling fable “The Hen Who Dreamed She Could Fly,” this heart-wrenching tale of an escaped battery hen who ends up raising a baby duck drew 2.2 million viewers to theaters. It remains Korea’s most successful homegrown animation to date. (For context, Disney’s $200 million “Mufasa: The Lion King” has been in theaters since Dec. 18 but has so far reached only 820,000 viewers here.)

The AI restoration brings striking clarity to the original animation. Scenes of the wilderness — forest groves, lakeside vistas and snowy expanses — once subdued, now burst with rich natural hues. Fine details, from the intricate patterns in the characters’ eyes to the varied textures of their beaks, show enhanced definition without appearing too artificial.

The remastering process has come a long way since the late 1990s, when teams of engineers manually examined films frame by frame. Back then, they would search for blemishes in the original negative — scratches, dirt, water stains — and painstakingly patch each one using information from clean frames.

Gone are those days of pixel-by-pixel drudgery. Today, AI models draw on vast online video archives — from both open-source collections and studio partnerships — to learn what pristine footage should look like. They can process entire films in a single pass, examining each frame and using pattern recognition to rebuild the missing details with precision.

In an interview with a local broadcaster, Inshorts founder and CEO Andy Lee claimed that the company’s AI upscaling technology “cuts time by 10 times and costs by half.”

Korea’s filmmakers aren’t stopping at restoration, though. December saw two unprecedented attempts with generative AI hit theaters. “It’s Me, Mun-hee,” released on Christmas Eve, starred veteran actress Na Mun-hee without her ever stepping on set. Throughout the 17-minute short, generative AI conjures the 84-year-old’s face and voice across five episodes, transforming her into the most unlikely array of characters — from Santa Claus and a CIA fugitive to an astronaut.

A scene from “It’s Me, Mun-hee.” PHOTO: MCA ENTERTAINMENT/THE KOREA HERALD

Taking an even bolder step into AI technology was “M Hotel,” which premiered Dec. 11. The 6.5-minute short dispenses with human actors entirely, using generative AI to craft the story of a homeless person who discovers a mysterious hotel key. The project has gained international recognition, making the shortlist at Venice’s Reply AI Film Festival and winning awards at the Busan International Artificial Intelligence Film Festival, the AMT International Film Festival in New York and the World Film Festival in Cannes. According to reports, four CJ ENM AI experts completed the entire production in less than a month using more than 10 different AI tools.

These experimental shorts share some common traits: both run under 20 minutes and feel more like tech demos than actual films. But it was the human faces that caught viewers’ attention. Critics were quick to point out how the AI-generated characters look unnaturally polished, with skin textures that appear too smooth and perfect. Elderly characters were particularly prone to this artificial gloss, creating an uncanny feel that some viewers found grotesque and off-putting.

If this criticism rings a bell, it’s because Hollywood recently went through its own AI makeover controversy. When James Cameron’s action classics “True Lies” and “The Abyss” got the machine-learning treatment last year, reactions were mixed. While the new versions popped with never-before-seen clarity, many viewers balked at the ultra-polished look, in which familiar faces and surfaces seemed stripped of their original texture and took on an almost plastic sheen.